Bigdata Testing – What to do?

“All code is guilty, until proven innocent.” – Anonymous

This blog is the first in a series that focuses on what to keep in mind while doing Bigdata testing.

Why is testing so important?

Testing is an integral part of the software development life cycle. It ensures that the software works as per the specifications and is of high quality. Testing is required to ensure that a software application or product performs effectively.

Testing in the Bigdata world is even more complicated due to the size and variety of data.

Why is Bigdata testing complex?

The 3 Vs that make Bigdata so powerful also make Bigdata testing very complex, and the wide technology landscape adds to the challenge:

Volume: The volume of data is so large that testing all of it is next to impossible.

Variety: Sources can deliver data in different formats: structured, semi-structured and unstructured.

Velocity: The rate of ingestion varies with the type of source (batch, real-time, near real-time, etc.). When data must be ingested in real time, replicating these scenarios in testing is challenging.

Technology Landscape: Many open source technologies are available for data ingestion, processing and analytics. This steepens the learning curve for testers and increases the time and effort required for testing.

Bigdata Testing Methodology

Bigdata testing can be primarily divided into 3 areas:

- Data validation testing (Preprocessing)

- Business logic validation testing (Processing)

- Output validation testing (Consumption)

This blog covers what should be tested in the data validation testing phase.

Data Validation Testing

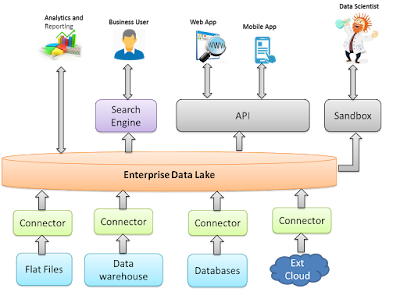

Data validation testing ensures that the right data has been ingested into the system. It consists of testing the data ingestion pipeline and the data storage.

Data Ingestion

Prominent Bigdata technologies used for data ingestion include Flume, Sqoop, Spark, Kafka and NiFi.

Multi-Source Integration – Data from multiple sources needs to be integrated into the ingestion pipeline. These sources may generate different volumes of data at different times.

Things to test:

- What is the ingestion mechanism for each source? (Is data pulled from each source or pushed by it?)

- What is the format in which data is received from each source?

- Has the input been checked against the expected minimum/maximum range?

- Is the data secure while getting transferred from source to target?

- Who has access to the source systems (RDBMS tables or SFTP folders)?

- In the case of SFTP, are the files getting archived or purged after being copied, at the agreed frequency?

- Are audit logs getting generated?

- Are ingestion error logs getting generated?
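One of the questions above, whether input has been checked against a minimum/maximum range, lends itself to automation. The following is a minimal Python sketch, assuming records arrive as dictionaries (for example rows parsed from a CSV extract) and that the ranges come from the agreed specification; `RangeRule` and `out_of_range` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class RangeRule:
    """Hypothetical rule: values in `column` must lie in [minimum, maximum]."""
    column: str
    minimum: float
    maximum: float


def out_of_range(records, rules):
    """Return (record_index, column, value) for every value outside its range.

    Missing or non-numeric values are reported as well, because they
    cannot be range-checked at all.
    """
    violations = []
    for i, record in enumerate(records):
        for rule in rules:
            value = record.get(rule.column)
            try:
                number = float(value)
            except (TypeError, ValueError):
                violations.append((i, rule.column, value))
                continue
            if not (rule.minimum <= number <= rule.maximum):
                violations.append((i, rule.column, value))
    return violations
```

For example, with rules `RangeRule("age", 0, 120)` and `RangeRule("amount", 0, 1000)`, a record with `"age": "-2"` or a missing age is reported, so the tester sees exactly which source rows break the agreed range.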

Integrity Check – Data integrity can be checked by several methods, such as record counts, file sizes and checksum validation.

Things to test:

- Does the size of the transferred file match the source file?

- Does the number of columns match between source and target?

- Is the sequence of columns correct?

- Do the column data types match?

- Does the record count match between source and target?

- Do the checksums for source and target match?
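Most of these integrity checks can be scripted for file-based transfers. Below is a minimal Python sketch, assuming the source extract and the landed copy are both UTF-8 CSV files with a header row; `integrity_report` and its helpers are hypothetical names. The checksum is computed in chunks so large files need not fit in memory.

```python
import csv
import hashlib
import os


def file_checksum(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def csv_shape(path, encoding="utf-8"):
    """Return (header, record_count); the header row is not counted as a record."""
    with open(path, newline="", encoding=encoding) as f:
        reader = csv.reader(f)
        header = next(reader, [])
        return header, sum(1 for _ in reader)


def integrity_report(source_path, target_path):
    """Compare a source extract with the copy that landed in the target."""
    src_header, src_count = csv_shape(source_path)
    tgt_header, tgt_count = csv_shape(target_path)
    return {
        "size_match": os.path.getsize(source_path) == os.path.getsize(target_path),
        "column_count_match": len(src_header) == len(tgt_header),
        "column_order_match": src_header == tgt_header,
        "record_count_match": src_count == tgt_count,
        "checksum_match": file_checksum(source_path) == file_checksum(target_path),
    }
```

Column data types are not checked here, since a CSV carries none; for typed targets such as Hive tables or Parquet files, the schemas would have to be compared instead.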

Change Data Capture (CDC) – For incremental data loads, all insert, update and delete scenarios for records/fields need to be tested.

Things to test:

- Are the new records getting inserted?

- Are records deleted at the source marked as deleted at the target?

- Are new versions getting created for updated records when history is maintained, or are the records overwritten with the latest version otherwise?

- Are audit logs getting generated?

- Are error logs getting generated?
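The insert, update and delete scenarios can be verified by deriving the expected changes from two source snapshots and checking them against the target. This is a minimal Python sketch under stated assumptions: snapshots are dictionaries keyed by primary key, and the target keeps, per key, a list of versions plus a soft-delete flag. The target layout and the function names are hypothetical.

```python
def classify_changes(previous, current):
    """Expected CDC operations from two source snapshots keyed by primary key."""
    prev_keys, curr_keys = set(previous), set(current)
    return {
        "insert": curr_keys - prev_keys,
        "delete": prev_keys - curr_keys,
        "update": {k for k in prev_keys & curr_keys if previous[k] != current[k]},
    }


def verify_target(changes, current, target, keep_history=True):
    """Return a list of problems; an empty list means every change was applied.

    Assumed target layout: {key: {"versions": [row, ...], "deleted": bool}},
    with the latest version last.
    """
    problems = []
    for key in sorted(changes["insert"]):
        entry = target.get(key)
        if entry is None or entry["versions"][-1] != current[key]:
            problems.append(f"insert not applied: {key}")
    for key in sorted(changes["update"]):
        entry = target.get(key)
        if entry is None or entry["versions"][-1] != current[key]:
            problems.append(f"update not applied: {key}")
        elif keep_history and len(entry["versions"]) < 2:
            problems.append(f"no history kept for update: {key}")
        elif not keep_history and len(entry["versions"]) != 1:
            problems.append(f"old version not overwritten: {key}")
    for key in sorted(changes["delete"]):
        entry = target.get(key)
        if entry is None or not entry["deleted"]:
            problems.append(f"delete not flagged: {key}")
    return problems
```

The `keep_history` switch mirrors the two options in the checklist: a versioned target must hold more than one version after an update, while an overwrite target must hold exactly one.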

Data Quality – Quality of data is one of the most important aspects of data ingestion. Data quality rules are defined by functional SMEs after discussion with business users. These rules are specific to the source system.

Things to test:

- Are any of the key fields null?

- Are there any duplicate records?

- Have all the data quality rules been applied?

- Does the total number of good and bad records match the number of records ingested from each source?

- Are the good records getting passed on to the next layer?

- Are the bad records getting stored for future reference?

- Can the bad records be traced back to the source?

- Can the reason for each bad record be traced?

- Can we trace the good and bad records to the respective data loads?

- Can the good and bad records be cleaned up for a particular load?

- Are error/audit logs getting generated?
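Several of these checks depend on how good and bad records are separated. Here is a minimal Python sketch, assuming only two illustrative rules (null key fields and duplicate keys) in place of the source-specific rules agreed with the SMEs; `apply_quality_rules` and the `_load_id`, `_source_line` and `_reasons` fields are hypothetical. Each bad record keeps its reasons, its position in the source and the load it came from, so it can be traced, reported and cleaned up per load.

```python
from collections import Counter


def apply_quality_rules(records, key_fields, load_id):
    """Split records into good and bad lists for one load.

    Assumed rules: key fields must not be null/empty, and a key must not
    occur more than once (every copy of a duplicated key is flagged).
    """
    key_counts = Counter(tuple(r.get(f) for f in key_fields) for r in records)
    good, bad = [], []
    for line_no, record in enumerate(records, start=1):
        reasons = [f"null key field: {f}" for f in key_fields
                   if record.get(f) in (None, "")]
        key = tuple(record.get(f) for f in key_fields)
        if key_counts[key] > 1:
            reasons.append("duplicate key")
        if reasons:
            # Bad records are kept with their reasons and source position for tracing.
            bad.append({**record, "_load_id": load_id,
                        "_source_line": line_no, "_reasons": reasons})
        else:
            good.append({**record, "_load_id": load_id})
    return good, bad
```

The reconciliation question then becomes a one-line check, `len(good) + len(bad) == len(records)`, and records from a single load can be purged by filtering on `_load_id`.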

Data Storage

Data ingested from multiple sources can be stored on premises or in the cloud, depending on the deployment model chosen:

On premises – Hadoop Distributed File System (HDFS), S3-compatible object storage or a NoSQL data store.

Cloud-based – S3, Glacier, HDFS, NoSQL, RDS, etc.

Things to test:

Common to all storage types:

- What is the file format of the ingested data?

- Is the data getting compressed as per the decided compression format?

- Is the integrity of data maintained after the compression?

- Is the data getting archived to the correct location?

- Is the data getting archived at the decided time frame?

- Is the data getting purged at the decided time frame?

- Is the data stored in the file system or NoSQL store accessible?

- Is the archived data accessible?
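The compression questions can be checked mechanically. A minimal Python sketch, assuming gzip was the agreed compression format (other codecs such as Snappy or formats such as Parquet would need their own libraries); the function names are hypothetical. It confirms the gzip magic bytes and that decompressing the stored file yields exactly the original bytes.

```python
import gzip
import hashlib


def sha256_of(path, opener=open, chunk_size=1 << 20):
    """SHA-256 of a file's content; pass opener=gzip.open to hash the decompressed bytes."""
    digest = hashlib.sha256()
    with opener(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_gzip(path):
    """gzip files start with the magic bytes 0x1f 0x8b (RFC 1952)."""
    with open(path, "rb") as f:
        return f.read(2) == b"\x1f\x8b"


def compressed_copy_is_intact(original_path, gzip_path):
    """True if decompressing gzip_path gives back exactly the bytes of original_path."""
    return sha256_of(original_path) == sha256_of(gzip_path, opener=gzip.open)
```

Comparing hashes of the decompressed stream, rather than file sizes, catches silent corruption as well as truncation.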

Simple Storage Service (S3):

- Is there a requirement to encrypt data in S3? If yes, is the data getting encrypted correctly?

- Is there a requirement for versioning data in S3? If yes, is the data getting versioned?

- Are rules set to move data to RRS (Reduced Redundancy Storage) or Glacier? Is the data moving according to the policy?

- Has access to the buckets been tested against the bucket policies and ACLs that have been set?

- Is the data in S3 encrypted?

- Can S3 data be accessed from the nodes in the cluster?
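The encryption and versioning questions can be answered from the bucket configuration. This is a minimal Python sketch, assuming a boto3 S3 client (`boto3.client("s3")`) is passed in; `get_bucket_versioning`, `get_bucket_encryption` and `head_object` are boto3 S3 client methods, while the wrapper functions and the "Unversioned" label are hypothetical. Because the client is passed in, the checks can also be exercised against a stub without AWS access.

```python
def s3_bucket_settings(s3, bucket):
    """Summarise versioning status and default encryption algorithms of a bucket."""
    # 'Status' is absent if versioning was never enabled on the bucket.
    versioning = s3.get_bucket_versioning(Bucket=bucket).get("Status", "Unversioned")
    try:
        rules = s3.get_bucket_encryption(Bucket=bucket)[
            "ServerSideEncryptionConfiguration"]["Rules"]
        algorithms = [r["ApplyServerSideEncryptionByDefault"]["SSEAlgorithm"]
                      for r in rules if "ApplyServerSideEncryptionByDefault" in r]
    except Exception as exc:
        # botocore's ClientError exposes the error code under .response["Error"]["Code"].
        code = getattr(exc, "response", {}).get("Error", {}).get("Code")
        if code != "ServerSideEncryptionConfigurationNotFoundError":
            raise
        algorithms = []
    return {"versioning": versioning, "default_encryption": algorithms}


def object_encryption(s3, bucket, key):
    """Server-side encryption reported for one object (e.g. 'AES256' or 'aws:kms'), or None."""
    return s3.head_object(Bucket=bucket, Key=key).get("ServerSideEncryption")
```

Checking a sample of objects with `object_encryption` complements the bucket-level view, since the bucket default only applies to objects written without their own encryption settings.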

How to Test?

The most common ways to test data are the following:

- Data Comparison – The simplest way is to compare the source and target files for any differences.

- Minus Query - Minus queries run source-minus-target and target-minus-source over all the data; any row returned exists on only one side. Because set operators return distinct rows, first make sure the extraction process did not introduce duplicate data in the source, and remove all unnecessary columns before loading the data for validation.
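A minus query can be tried out on a small scale with Python's built-in `sqlite3` module. Oracle calls the operator MINUS; standard SQL and SQLite call it EXCEPT. The sketch below assumes the source and target rows are already loaded into two tables with the same columns; `minus_queries` is a hypothetical helper, and the table and column names are interpolated into the SQL, so they must be trusted identifiers.

```python
import sqlite3


def minus_queries(conn, source_table, target_table, columns):
    """Run source-minus-target and target-minus-source; both empty means the sets match.

    EXCEPT returns distinct rows, so duplicates on one side are not detected.
    """
    cols = ", ".join(columns)
    src_minus_tgt = conn.execute(
        f"SELECT {cols} FROM {source_table} EXCEPT SELECT {cols} FROM {target_table}"
    ).fetchall()
    tgt_minus_src = conn.execute(
        f"SELECT {cols} FROM {target_table} EXCEPT SELECT {cols} FROM {source_table}"
    ).fetchall()
    return src_minus_tgt, tgt_minus_src


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE src (id INTEGER, name TEXT)")
conn.execute("CREATE TABLE tgt (id INTEGER, name TEXT)")
conn.executemany("INSERT INTO src VALUES (?, ?)", [(1, "a"), (2, "b"), (3, "c")])
conn.executemany("INSERT INTO tgt VALUES (?, ?)", [(1, "a"), (2, "B"), (4, "d")])
missing_in_target, unexpected_in_target = minus_queries(conn, "src", "tgt", ["id", "name"])
```

Here row 3 is missing from the target, row 4 appears only in the target, and row 2 shows up on both sides because its value changed.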

Neither of these methods is easy to use in the Bigdata world because the volume is huge. Hence we have to use other methods:

- Data Sampling - The entire dataset cannot be tested record by record because the volume is huge, so data needs to be tested by sampling. It is very important to select the correct sample of data to ensure that all scenarios have been tested. Samples should be selected to cover the maximum number of variations in the data.

- Basic Data Profiling – Another way to ensure that the data has been ingested correctly is data profiling, for example the count of records ingested, aggregations on certain columns, and minimum and maximum value ranges.

- Running queries to check data correctness – Queries such as grouping on certain columns or fetching the top and bottom 5 records can help check whether the correct number of records has been ingested for each scenario.
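Sampling and profiling can be combined. The following minimal Python sketch assumes records are dictionaries and that the tester chooses the column defining the "variations" to cover; `stratified_sample` and `profile` are hypothetical names. Stratified sampling takes a fixed number of records from every stratum, so rare variations are not drowned out by common ones. The profile is computed on both source and target and then compared.

```python
import heapq
import random
from collections import Counter, defaultdict


def stratified_sample(records, stratum_of, per_stratum, seed=0):
    """Pick up to per_stratum records from every stratum (fixed seed for repeatable tests)."""
    groups = defaultdict(list)
    for record in records:
        groups[stratum_of(record)].append(record)
    rng = random.Random(seed)
    return {s: rng.sample(rows, min(per_stratum, len(rows))) for s, rows in groups.items()}


def profile(records, numeric_column, group_column):
    """Basic profile: counts, min/max/sum, top and bottom 5 values, count per group."""
    values = [r[numeric_column] for r in records]
    return {
        "count": len(records),
        "min": min(values, default=None),
        "max": max(values, default=None),
        "sum": sum(values),
        "top_5": heapq.nlargest(5, values),
        "bottom_5": heapq.nsmallest(5, values),
        "per_group": dict(Counter(r[group_column] for r in records)),
    }
```

Usage: if `profile(source_rows, "amount", "region") == profile(target_rows, "amount", "region")`, the aggregates agree, and the records returned by `stratified_sample` can then be compared field by field against the source.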

Part 2 of the blog will cover business logic validation testing and data consumption.

Part 3 of the blog will cover performance and security testing.